Imagine burying broken glass in a forest. In one possible future, a child steps on the glass in 5 years' time, and hurts herself. In a different possible future, a child steps on the glass in 500 years' time, and hurts herself just as much. Longtermism begins by appreciating that both possibilities seem equally bad: why stop caring about the effects of our actions just because they take place a long time from now?

It’s natural to care about future generations: we may all want the lives of our children and grandchildren to go well, even before they are born. As the example above shows, this care could be extended far into the future, beyond even the lives of our grandchildren.

What happens when we no longer limit the scope of our concern to just the next couple of generations? Once we consider how many generations could follow ours, the implications look striking: our actions could influence the lives of far more people than many expect.

Put simply, humanity might last for an incredibly long time. The lifespans of other species suggest there might be hundreds of thousands of years ahead of us, and the Earth will remain habitable for hundreds of millions of years. If human history were a novel, we might still be living on its very first page.

But this isn’t just academically interesting. We might be living through a period in which our decisions today really matter for all of those future generations. On a zoomed-out timescale, we’re living through uniquely rapid change, growth, and technological progress. On one hand, this could present a time of opportunities — for instance, to make sure the values that are instilled in the technologies we build this century benefit everyone, long into the future. On the other hand, this suggests we may be living through a ‘time of perils’ — a period where it may be possible to bring about a catastrophe severe enough to permanently curtail humanity’s potential. Yet, it looks like there are things we can start doing now to make that less likely.

You might agree that future people matter in the abstract. But it’s this point, that our actions today could meaningfully impact their lives, that might compel you to believe that positively influencing the long-term future should be a key moral priority of our time. This is the idea encapsulated by longtermism.

This introduction will explain these motivations behind longtermism in more depth: that future people matter morally; that there could well be an enormous number of them; and that there are things we can do today to help ensure that their lives go well.

Prefer to listen? Try Ezra Klein's New York Times interview with William MacAskill.

Prefer to watch? Try "Orienting towards the long-term future" by Joe Carlsmith.

The "resources" page on this website includes many more talks and podcasts related to longtermism.

Future people matter

Thousands, perhaps millions, of generations might follow ours. But should we care about them?

Suppose an old friend is having a hard time, and wants to speak to you on the phone. You thought they were living in the same country as you, but you learn that they recently travelled to a different continent. Would you be less inclined to help them when you learn that they’re far away from you in space? Of course not.

Many people would agree that a person’s distance from us in space doesn’t affect how much their life matters. As long as it’s just as easy to help them, a person’s needs don’t matter less just because they live further away from us.

If people matter equally regardless of where they are born, shouldn’t they also matter equally regardless of when they are born?

Certainly being born earlier rather than later in time isn’t a reason for mattering more. From the perspective of our ancestors, we were once future people, and many generations lay between us and them. Of course, it would be strange to claim that our lives matter less simply because we were born later: our joys and pains are just as real and as important as those of the generations that came before us. But people living far in the future will feel just the same when they compare the value of their lives to ours.

To be sure, there could be reasons to weigh the lives of people close to you in time and space more — perhaps because they are close friends or family, or because their closeness means you find it easier to improve their lives. But it is never the mere fact of their closeness in space or time that matters morally. The example of burying glass in a forest, first described by the late philosopher Derek Parfit, helps illustrate this.

Humanity’s potential is enormous

One reason saving a human life is so valuable is that, in saving the life, you save that person's potential. Even when nobody dies, we might still regret that some people don't get to reach their full potential. For instance, a promising young scientist might not make the breakthroughs she could have made for any number of reasons, like discrimination or lack of encouragement. The greater a person's potential, the more important it seems to protect it and do what we can to make sure it's realised, and the greater the loss if it isn't. But this doesn't just apply to individual people: humanity too can be said to have a potential, the latent possibility of an extraordinary future. Like the promising scientist, we might come very close to collectively achieving that full potential, or we might foreclose almost all of it. The size of our potential therefore matters. The better and more expansive it looks like our future could be, the more important it will be to protect it.

So how many people might live in the future, and how good (or bad) could their lives be? Of course, we can’t get precise estimates, but we can look for clues about our potential: how the future might very plausibly go if things go relatively well. Reflecting on this question suggests that the vast majority of humans may not yet have been born; almost all of what matters most could be enjoyed by generations stretching far beyond even our grandchildren.

To begin with, a typical mammalian species survives for an average of about a million years. The human species, Homo sapiens, has so far lasted for around 300,000 years. Therefore, if humans last roughly as long as most mammals, we might expect to have around 700,000 years remaining: more than 20,000 future generations, assuming roughly 30 years per generation. Of course, humanity is not a typical mammalian species, not least because we have the technological means of preventing and recovering from threats to our own survival. We could do even better than the standard set by other mammals.
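The arithmetic behind this estimate can be sketched in a few lines of Python. The lifespan and age figures are the round numbers quoted above; the 30-year generation length is an assumption introduced here for illustration, so the result is an order-of-magnitude guide rather than a forecast.

```python
# Back-of-envelope check. All inputs are rough, round figures.
TYPICAL_SPECIES_LIFESPAN_YEARS = 1_000_000  # average mammalian species (from the text)
HUMAN_AGE_SO_FAR_YEARS = 300_000            # Homo sapiens to date (from the text)
YEARS_PER_GENERATION = 30                   # assumption, not from the text


def remaining_years(lifespan_years: int, elapsed_years: int) -> int:
    """Years left if the species lasts exactly its typical lifespan."""
    return max(lifespan_years - elapsed_years, 0)


def generations_in(years: int, years_per_generation: int = YEARS_PER_GENERATION) -> int:
    """Whole generations that fit into a span of years."""
    return years // years_per_generation


years_left = remaining_years(TYPICAL_SPECIES_LIFESPAN_YEARS, HUMAN_AGE_SO_FAR_YEARS)
generations_left = generations_in(years_left)

print(f"Years remaining: {years_left:,}")
print(f"Future generations: {generations_left:,}")
```

Shortening the assumed generation length to 25 years raises the generation count, and lengthening it lowers the count, but the answer stays in the tens of thousands either way.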

So we might next look to the entire future of Earth. Our planet will likely remain habitable for several hundred million years (before it gets sterilised by the sun). If humanity survived for just 1% of that time, there would be well over a hundred thousand more generations to come after us. Assuming similar numbers of people lived per century as in the recent past, that works out to something on the order of a million billion (a quadrillion) future human lives: around ten thousand times the number of humans who have ever lived to date.
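As a rough check on the scale involved, the sketch below works through the same steps. Every input is an assumption made for illustration: 500 million years stands in for "several hundred million", a generation is taken to be 30 years, about 13 billion births per century approximates recent decades, and 100 billion is a commonly cited rough figure for the number of humans who have ever lived. Other reasonable choices shift the output by a small factor in either direction.

```python
# Order-of-magnitude sketch. Every constant below is an illustrative assumption.
HABITABLE_YEARS = 500_000_000        # stand-in for "several hundred million years"
PERCENT_SURVIVED = 1                 # humanity lasts for 1% of that span
YEARS_PER_GENERATION = 30            # assumed generation length
BIRTHS_PER_CENTURY = 13_000_000_000  # roughly the recent global rate
HUMANS_EVER_LIVED = 100_000_000_000  # rough estimate of all humans to date

# Integer arithmetic keeps the results exact and easy to check by hand.
years_survived = HABITABLE_YEARS * PERCENT_SURVIVED // 100
future_generations = years_survived // YEARS_PER_GENERATION
future_lives = (years_survived // 100) * BIRTHS_PER_CENTURY
multiple_of_past = future_lives // HUMANS_EVER_LIVED

print(f"Years survived: {years_survived:,}")
print(f"Future generations: {future_generations:,}")
print(f"Future lives: {future_lives:.1e}")
print(f"Multiple of all humans so far: {multiple_of_past:,}")
```

Under these particular assumptions the total comes out in the hundreds of trillions, several thousand times the number of people who have lived so far. A longer habitable span or a higher birth rate pushes it past a quadrillion.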

But will humanity stop there? Just 66 years separated the first successful heavier-than-air flight from humans walking on the Moon. Imagine how much progress we can make on spacefaring technology in a hundred or even a thousand years. Given what we already know, it looks entirely possible for humans to eventually venture beyond Earth. If we choose to travel beyond our planet, the night sky might one day be full of thousands of other stars we call home.

This might sound too much like sci-fi, or too ungrounded in hard evidence. But nobody has a good idea of exactly how the future will look. What matters is that the human future could be extraordinarily large in duration and extent.

The scale of the future can be difficult to comprehend — once numbers get large enough, they tend to all sound the same. To this end, the physicist James Jeans suggested the following metaphor. Imagine a postage stamp on a single coin. If the thickness of the coin and stamp combined represents our lifetime as a species, then the thickness of the stamp alone represents the extent of recorded human civilisation. Now imagine placing the coin on top of a 20-metre tall obelisk. If the stamp represents the entire sweep of human civilisation, the obelisk represents the age of the Earth. Now we can consider the future. A 5-metre tree placed atop the obelisk represents the Earth's habitable future. And behind this arrangement, the height of the Matterhorn mountain represents the habitable future of the universe.
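To make the metaphor's proportions concrete, the sketch below converts between heights and time spans. It assumes that the 20-metre obelisk stands for an Earth age of about 4.5 billion years and that the Matterhorn's "height" means its summit elevation of 4,478 metres; neither figure is stated in the text above, and the outputs are order-of-magnitude illustrations only.

```python
# Scale implied by the metaphor: obelisk height <-> age of the Earth.
EARTH_AGE_YEARS = 4_500_000_000  # approximate age of the Earth (assumption)
OBELISK_HEIGHT_M = 20            # from the text
TREE_HEIGHT_M = 5                # from the text
MATTERHORN_HEIGHT_M = 4_478      # summit elevation in metres (assumption)
SPECIES_AGE_YEARS = 300_000      # Homo sapiens to date

YEARS_PER_METRE = EARTH_AGE_YEARS // OBELISK_HEIGHT_M


def years_represented(height_m: int) -> int:
    """Time span that a given height stands for on this scale."""
    return height_m * YEARS_PER_METRE


def height_mm(years: int) -> float:
    """Height in millimetres that a given time span occupies on this scale."""
    return years / YEARS_PER_METRE * 1000


tree_years = years_represented(TREE_HEIGHT_M)
matterhorn_years = years_represented(MATTERHORN_HEIGHT_M)
coin_and_stamp_mm = height_mm(SPECIES_AGE_YEARS)

print(f"One metre represents {YEARS_PER_METRE:,} years")
print(f"Tree: {tree_years:,} years")
print(f"Matterhorn: {matterhorn_years:,} years")
print(f"Coin plus stamp: {coin_and_stamp_mm:.2f} mm")
```

On this scale the coin and stamp together are a little over a millimetre thick, the tree stands for roughly a billion years, and the Matterhorn for roughly a trillion.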

Of course, the survival of humanity across these vast timescales is only desirable if the lives of future people are worth living. Fortunately, there are reasons to suspect that the future could be extraordinarily good. We have already made staggering progress: the fraction of people living in extreme poverty fell from around 90% in 1820 to less than 10% in 2015 (and the absolute numbers are declining too). Over the same period, child mortality fell from over 40% to less than 5%, and the share of people living in a democracy increased from less than 1% to most people in the world. But even further progress is possible, and we can hope that further scientific and medical breakthroughs will continue to improve lives in the future.

Of course, the world has a long way to go before it is free of immediately pressing problems like extreme poverty, injustice, animal suffering, and destruction from climate change. Pointing out the scope of positive futures open to us should not mean ignoring today's problems. In fact, fully appreciating how good things might eventually be could be an extra motivation for working on them — it means the pressing problems of our time needn't be perennial: if things go well, solving them now could come close to solving them for good.

The long-term future could be extraordinary, but that’s not guaranteed. It might instead be bad: perhaps characterised by stagnation, an especially stable kind of totalitarian political regime, or ongoing conflict. That is no reason to give up on protecting the long-term future. On the contrary, noticing that the future could be very bad should make the opportunity to improve it seem more significant — preventing future tragedies and hardship is surely just as important as making great futures more likely.

We don’t know exactly what humanity's future will look like. What matters is that the future could be extraordinarily good or inordinately bad, and it is likely vast in scope — home to most people who will ever live, and most of what we find valuable today. If we could make it more likely that we do eventually reach this potential, or if we could otherwise improve the lives of thousands of generations hence, that could matter enormously.

Our actions could influence the long-term future

Are there things we could do now which might reliably improve or safeguard the very long-run future? For people living in long stretches of the past, the answer may indeed have been ‘no’. Yet, there are compelling reasons for thinking that this moment in history could be a point of unusual influence over humanity’s future.

One clear example is climate change. We now know beyond reasonable doubt that human activity disrupts Earth's climate, and that climate change will have devastating effects. We also know that some of these effects could last a very long time, because carbon dioxide can persist in the Earth's atmosphere for tens of thousands of years. But we have control over how much damage we cause, such as by redoubling efforts to develop green technology, building more zero-carbon energy sources, and pricing carbon emissions in line with their true social cost. For these reasons, longtermists have strong reasons to be concerned about climate change, and many are actively working on climate issues. Mitigating the effects of climate change is a kind of ‘proof of concept’ for positively influencing the long-run future; but it’s not the only example.

Many people still alive today were children when humanity first learned how it might eventually destroy itself. In July of 1945, the first nuclear weapon was detonated at the Trinity Site in New Mexico. Aside from its immediate devastation, large-scale nuclear war could precipitate a severe and prolonged ‘nuclear winter’, potentially causing large numbers of casualties across the world from widespread crop failure. Yet, since the Trinity test, nuclear weapons have proliferated into the tens of thousands, with many still on hair-trigger alert today.

This suggests the possibility of an existential catastrophe — an event which permanently curtails human potential, such as by causing human extinction. Other than the obvious fact that an existential catastrophe could cause untold losses for living people, working to prevent an existential catastrophe this century also makes it more likely that thousands of generations of future people get to live in the first place: a clear positive impact on the long-run future.

Unfortunately, nuclear weapons don’t look like the only potential cause of an existential catastrophe. Consider biotechnology. We've seen that pandemics like COVID-19 can have devastating effects. But with modern biotechnology, it will become possible to engineer pathogens to be far more deadly or transmissible than naturally occurring pathogens, threatening not just millions, but potentially billions of lives. And the barriers to engineering a pandemic are likely to drop even further over the coming decades. The first project to map the entire human genome took around 13 years and half a billion dollars to complete (in 2003). Today, a full genome can be sequenced in under an hour, or for around $1,000. And while DNA synthesis is still costlier, its price has already fallen by a factor of more than 1,000.

Next, consider artificial intelligence. Leading experts on AI, such as Stuart Russell, are increasingly trying to warn us about the dangers, as well as the benefits, that advanced AI could bring. Experts note that it is entirely plausible we will reach general-purpose, greater-than-human-level AI within either our lifetimes or the lifetimes of our children. This could radically transform all aspects of life. Consider how humans have almost full control over other primates, such as by confining them to zoo exhibits for entertainment. Things didn’t turn out this way because we had a strength advantage, or even because we especially wanted to subjugate other primates, but on account of our intelligence. Can we be sure that things will go well if we create machines that are to us as we are to other primates? AI experts therefore also worry that such powerful AI systems could end up with the wrong values, and thereby pose an existential threat. The problem of designing safe AI systems aligned with good values is a difficult one, but failing to solve it could effectively mean losing control over the long-term future. Solving AI alignment could therefore be among the most important challenges we face this century.

We mention the examples of nuclear war, engineered pandemics, and unaligned AI not because they are necessarily the biggest risks to humanity’s long-run potential, but because they illustrate some general points about existential risks. A century ago, close to nobody was able to conceive of these risks at all. As we continue to invent ever more powerful technologies, perhaps that trend will continue. This century could be a time of unusual vulnerability for our highly-networked global society.

The Oxford philosopher Toby Ord summarises the moral significance of existential risks in his book The Precipice:

When I think of the millions of future generations yet to come, the importance of protecting humanity’s future is clear to me. To risk destroying this future, for the sake of some advantage limited only to the present, seems to me profoundly parochial and dangerously short-sighted. Such neglect privileges a tiny sliver of our story over the grand sweep of the whole; it privileges a tiny minority of humans over the overwhelming majority yet to be born; it privileges this particular century over the millions, or maybe billions, yet to come.

In addition to preventing existential risks, we might be able to positively influence the long-term future in other ways. For instance, we could petition to reform our political institutions to represent the interests of future generations: people who will live with the effects of policy decisions today, but who have no voice with which to influence them. This could take the form of national committees and offices, new voting methods, or international frameworks and panels. There is good news on this front: the secretary-general of the United Nations recently announced an agenda to consider setting up new UN projects focused on protecting future generations.

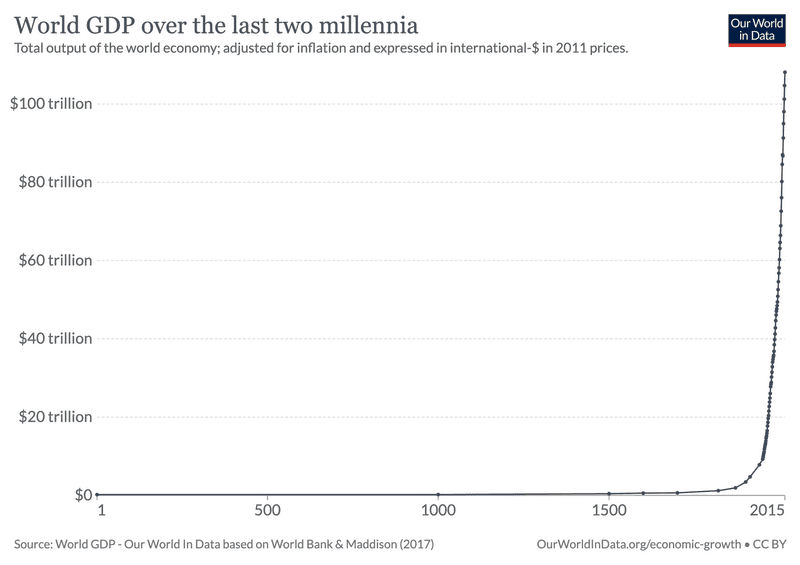

Now might be a time of unusual influence over the values that end up being held by many successive generations. History suggests that periods of rapid change are often periods in which decisions about political or moral values are contingent and unpredictable, but then ossify over long stretches of time. And we are living in a period of rapid change, not just because of the technological advances described above, but also because the world is becoming increasingly culturally and politically globalised, and because we are living through a period of steady economic growth that looks meteoric when set in the context of the long sweep of human history.

If bad or undemocratic values soon get ‘locked-in’ for a very long time, and we fail to prevent that, then we may have failed succeeding generations. That points towards another way to positively influence the long-term future: by making it less likely that a single set of moral, cultural, or political values somehow comes to dominate prematurely, before every group and perspective can be heard.

Putting things together

Many people would agree that future generations deserve moral consideration just like our own; that we do not intrinsically matter more than people in the future. This moral claim may be easy to accept partly because it’s not clear how taking it seriously could alter our priorities. After all, it’s very natural to assume that there is nothing we could do to improve the lives of future people thousands or millions of years hence. Perhaps the effects of our efforts will quickly fade over time, or perhaps we are so uncertain about the future that we can’t be sure whether any of our efforts will end up being good or bad. Yet, as we’ve seen, reflecting on our moment in history suggests things we can do now to influence the long-term future, or even to determine whether future generations will get to live at all.

Truly taking time to appreciate what we might achieve over the long term makes this possibility look remarkably significant: our decisions now might be felt, in a significant sense, by billions of people yet to be born.

Concretely, this could mean working to develop better countermeasures for future pandemics, like a system for detecting novel pathogens early on. It could mean lobbying for political institutions that protect future generations, or submitting proposals to the Summit of the Future in 2023. Or it could mean doing research in economics, history, law, or philosophy to figure out other mechanisms for influencing the long-run future, or technical research making sure that powerful AI systems end up making transparent, legible decisions which are aligned with human values.

Conclusion

It’s understandable to react by thinking these ideas sound too weird, or too much like sci-fi. Of course, you don’t need to find all of this convincing to find some aspect of longtermism valuable. Longtermism is not a single, narrow view about exactly what we should be prioritising and why. Instead, it’s a broad perspective which can and should tie together all kinds of research.

But we shouldn’t disregard these ideas just because they sound outlandish: moral ideas that once seemed avant-garde, like the idea of treating animals humanely, are now commonplace. The long-term future could be very weird, it could be truly remarkable in scope and achievement, or it could end prematurely and tragically. But it almost certainly won’t look like how things are today.

We are still very uncertain about the best ways to make sure the future goes well. But it does seem extremely overconfident to claim there’s nothing we can do now to help people over the very long-run future.

Therefore, longtermism suggests that we don’t give up in the face of this uncertainty, but we instead make progress on understanding even more — because our choices this century could determine which future we end up in. Our point in history suggests this project may be a key priority of our time. And there is much more to learn.

This was a brief introduction to a big topic, and there are all sorts of avenues you could read more about. For some places to start, see the 'resources' page on this website. If you’re curious about how you might contribute to any of this, take a look at the ‘get involved’ page.